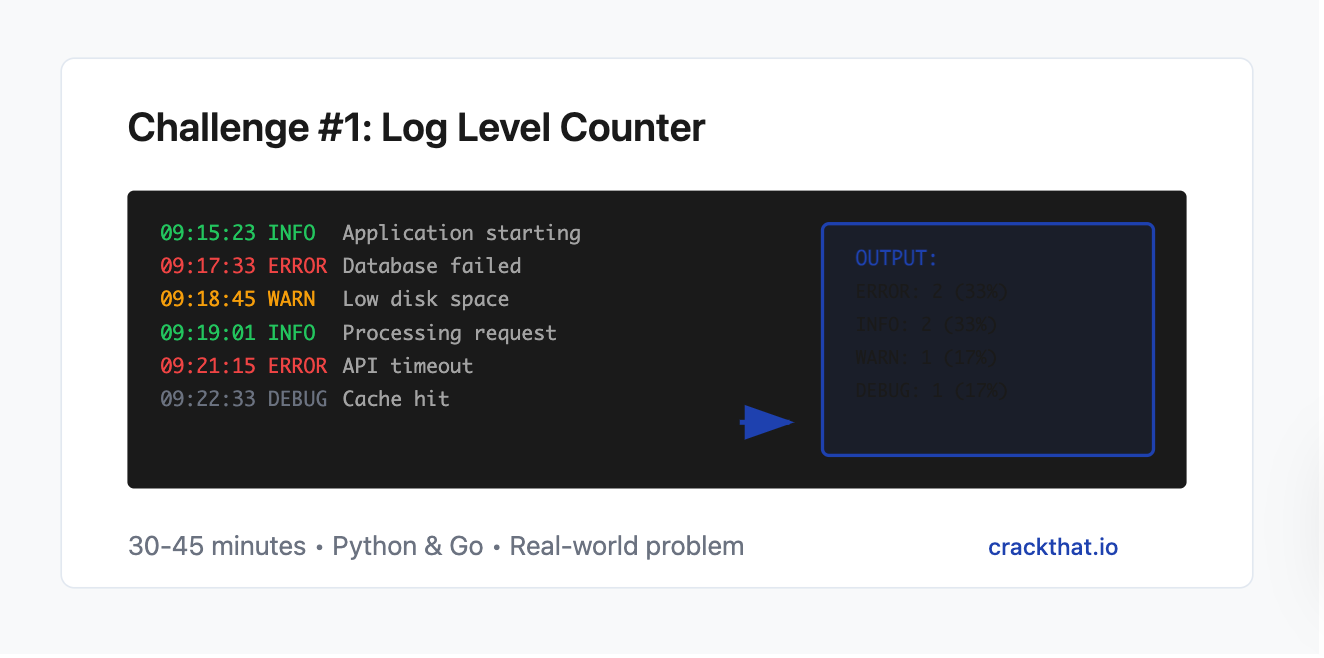

Coding Challenge #1: Log Level Counter

Your application is spitting out thousands of log entries daily, and your manager just asked: "How many errors did we have yesterday?"

You could manually grep through the logs, but there's got to be a better way. Plus, you suspect there might be patterns in when errors occur that could help prevent future issues.

Your Mission

Build a log analyzer that counts different log levels and gives you insights into your application's health.

Requirements

Your tool must:

Read log files and count occurrences of each log level (ERROR, WARN, INFO, DEBUG)

Display results sorted by frequency (highest first)

Handle case-insensitive matching

Support custom log formats via command-line arguments

Calculate percentage distribution of each log level

Show timestamps of first and last occurrence for each level
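One possible shape for the counting step, offered as a rough sketch rather than the reference solution. The names `LINE_RE` and `summarize` are made up for illustration, and the code assumes log lines arrive in chronological order, so the first and last lines seen for a level are its first and last occurrences.

```python
import re
from collections import defaultdict

# Assumed format: "YYYY-MM-DD HH:MM:SS LEVEL message" (same as the sample input).
LINE_RE = re.compile(
    r"(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})\s+(ERROR|WARN|INFO|DEBUG)\b",
    re.IGNORECASE,  # requirement: case-insensitive matching
)


def summarize(lines):
    """Return (total, rows); rows are (level, count, percent, first, last)."""
    stats = defaultdict(lambda: {"count": 0, "first": None, "last": None})
    total = 0
    for line in lines:
        match = LINE_RE.search(line)
        if not match:
            continue  # skip lines that do not look like log entries
        timestamp, level = match.group(1), match.group(2).upper()
        entry = stats[level]
        entry["count"] += 1
        if entry["first"] is None:
            entry["first"] = timestamp  # assumes chronological input
        entry["last"] = timestamp
        total += 1

    rows = []
    for level, entry in stats.items():
        percent = round(100.0 * entry["count"] / total, 1)
        rows.append((level, entry["count"], percent, entry["first"], entry["last"]))

    # Highest count first; ties broken alphabetically so output is stable.
    rows.sort(key=lambda row: (-row[1], row[0]))
    return total, rows
```

Because `summarize` accepts any iterable of strings, it can be fed an open file object directly or a plain list in a unit test.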

Sample Input (app.log)

2025-07-18 09:15:23 INFO Application starting up

2025-07-18 09:15:45 INFO Database connection established

2025-07-18 09:16:12 WARN Low disk space detected

2025-07-18 09:17:33 ERROR Failed to connect to payment gateway

2025-07-18 09:18:01 INFO Processing user request

2025-07-18 09:19:15 ERROR Database timeout occurred

2025-07-18 09:20:47 INFO User session created

2025-07-18 09:21:22 DEBUG Cache hit for user profile

2025-07-18 09:22:18 WARN Rate limit approaching

2025-07-18 09:23:44 ERROR Invalid API key provided

Expected Output

Log Analysis Report

===================

Total log entries: 10

Log Level Distribution:

-----------------------

INFO: 4 (40.0%) | First: 09:15:23 | Last: 09:20:47

ERROR: 3 (30.0%) | First: 09:17:33 | Last: 09:23:44

WARN: 2 (20.0%) | First: 09:16:12 | Last: 09:22:18

DEBUG: 1 (10.0%) | First: 09:21:22 | Last: 09:21:22

Summary: 🔴 3 errors detected - investigate payment gateway and API issues

Starter Code Templates

Python Approach

import re
import sys
from collections import defaultdict
from datetime import datetime


def parse_log_line(line):
    """Extract timestamp and log level from a log line"""
    # Basic pattern: YYYY-MM-DD HH:MM:SS LEVEL message
    pattern = r'(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})\s+(ERROR|WARN|INFO|DEBUG)'
    match = re.search(pattern, line, re.IGNORECASE)
    if match:
        return {
            'timestamp': match.group(1),
            'level': match.group(2).upper()
        }
    return None


def analyze_logs(filename):
    """Main analysis function"""
    # Your implementation here
    pass


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print("Usage: python log_analyzer.py <logfile>")
        sys.exit(1)
    analyze_logs(sys.argv[1])
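The starter above accepts only a file name, while the requirements also ask for custom log formats via command-line arguments. A minimal sketch of one way to do that with `argparse` follows. The `--pattern` flag, the named groups `timestamp` and `level`, and the helper names are all assumptions made for illustration, not part of the challenge spec.

```python
import argparse
import re

# Assumed default: the starter pattern, rewritten with named groups.
DEFAULT_PATTERN = (
    r"(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})\s+"
    r"(?P<level>ERROR|WARN|INFO|DEBUG)"
)


def build_parser():
    """Build a CLI with a positional log file and an optional --pattern flag."""
    parser = argparse.ArgumentParser(description="Count log levels in a file")
    parser.add_argument("logfile", help="path to the log file")
    parser.add_argument(
        "--pattern",
        default=DEFAULT_PATTERN,
        help="regex with named groups 'timestamp' and 'level'",
    )
    return parser


def compile_pattern(text):
    """Compile a user-supplied pattern and check it has the groups we rely on."""
    regex = re.compile(text, re.IGNORECASE)
    missing = {"timestamp", "level"} - set(regex.groupindex)
    if missing:
        raise ValueError(f"pattern is missing named groups: {sorted(missing)}")
    return regex
```

Named groups keep the rest of the analyzer independent of group order, so a format that puts the level before the timestamp, such as `[ERROR] 09:00:01 ...`, only needs a different `--pattern` value.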

Go Approach

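package main

import (
	"bufio"
	"fmt"
	"os"
	"regexp"
	"strings"
)

type LogEntry struct {
	Timestamp string
	Level     string
}

// Pattern: YYYY-MM-DD HH:MM:SS LEVEL message
// (?i) makes the match case-insensitive; compiled once at package level.
var logPattern = regexp.MustCompile(`(?i)(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})\s+(ERROR|WARN|INFO|DEBUG)`)

func parseLogLine(line string) *LogEntry {
	matches := logPattern.FindStringSubmatch(line)
	if len(matches) == 3 {
		return &LogEntry{
			Timestamp: matches[1],
			Level:     strings.ToUpper(matches[2]),
		}
	}
	return nil
}

func analyzeLogs(filename string) error {
	file, err := os.Open(filename)
	if err != nil {
		return err
	}
	defer file.Close()

	// Reading line by line keeps the bufio import in use so the template compiles.
	scanner := bufio.NewScanner(file)
	for scanner.Scan() {
		entry := parseLogLine(scanner.Text())
		if entry == nil {
			continue // not a recognizable log line
		}
		// Your implementation here
	}
	return scanner.Err()
}

func main() {
	if len(os.Args) != 2 {
		fmt.Println("Usage: go run log_analyzer.go <logfile>")
		os.Exit(1)
	}
	if err := analyzeLogs(os.Args[1]); err != nil {
		fmt.Printf("Error: %v\n", err)
		os.Exit(1)
	}
}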

Test Your Solution

Save the sample input as app.log

Run your solution: python log_analyzer.py app.log or go run log_analyzer.go app.log

Verify your output matches the expected format

Bonus Challenges

Add support for different timestamp formats

Include hourly distribution analysis

Generate alerts when error percentage exceeds threshold

Export results to JSON format

Add color-coded output for terminal display
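As a starting point for three of these bonuses (hourly distribution, threshold alerts, JSON export), here is a hedged sketch. It assumes entries are dicts with `timestamp` and `level` keys, as returned by the Python starter's `parse_log_line`. The function names and the 25% default threshold are arbitrary choices for illustration.

```python
import json
from collections import Counter
from datetime import datetime


def hourly_distribution(entries, level="ERROR"):
    """Count entries of one level per hour of day (0-23)."""
    hours = Counter()
    for entry in entries:
        if entry["level"] == level:
            stamp = datetime.strptime(entry["timestamp"], "%Y-%m-%d %H:%M:%S")
            hours[stamp.hour] += 1
    return dict(sorted(hours.items()))


def error_alert(entries, threshold_percent=25.0):
    """Return True when the share of ERROR entries exceeds the threshold."""
    if not entries:
        return False  # nothing to alert on, and avoids dividing by zero
    errors = sum(1 for entry in entries if entry["level"] == "ERROR")
    return 100.0 * errors / len(entries) > threshold_percent


def to_json(entries):
    """Serialize total and per-level counts as a JSON string."""
    counts = Counter(entry["level"] for entry in entries)
    report = {"total": len(entries), "levels": dict(counts)}
    return json.dumps(report, indent=2, sort_keys=True)
```

Supporting other timestamp formats would mostly mean making the `strptime` format string configurable.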

Why This Challenge Matters

Log analysis is a daily task for developers and DevOps engineers. This challenge teaches:

Regular expressions for pattern matching

File I/O and text processing

Data aggregation and statistics

Command-line tool development

Real-world debugging skills